Multi-Agent Systems: Finite Horizons

May-2014

In our previous post, we covered the basics of what a Dec-POMDP is. Let’s actually look at what a policy is and how we can generate one.

The Example

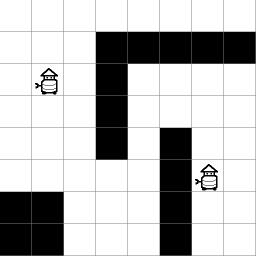

In this example, there are two robots that are trying to meet anywhere on the map. They want to do this optimally. Unfortunately, they don’t have perfect motor movement, and their sensor data is often wrong. They need to plan a way to meet up despite this.

DISCLAIMER: Everything below is pseudo (read as “non-working”) code. I will update this as I find bugs in what I have written.

Finite Horizons

If we assume that we only care about the future for N steps, one option to tackle this problem is a policy tree. A policy tree determines what actions to do in what belief states. Its root is at state 0 for the robot. If we wanted to exhaustively build policies for every action, and every observation seen at every action, the tree starts to grow quickly… very quickly… For every action and observation set, the agent needs to track the possible action/observations of the other agents as well in order to act optimally. This leads to exponential growth complexity. However, let’s ignore that for now. This is a naieve example.

Generating a Policy

A “Policy” is another word for “action plan” for an agent, it defines what the agent will do with what probabilities. In order to generate a policy, we need:

- A value function to determine what our goal should be

- A utility function to determine how close our actions are putting us toward our goal

The Value Function

In order to generate a policy for moving around using decision theory, we need a value function. It needs to let the algorithm know the rewards and dangers of different targets. If you value time, then staying still should have a negative value. If you want to reach a goal, it should have a high value. If you want to avoid a danger, it needs to have a very negative value. For this example, let’s create the following value function:

# Rewards may be based on both states and actions. def value(state, agent_actions): if state.agent1.pos == state.agent2.pos: return 100.0 else: return -0.1 |

This is going to encourage the agents to meet up as efficiently as possible. The fact that they keep getting 100 additional value every step where they are in the same space would be a problem if we were going off to infinity, as every move would result in roughly infinity value. We aren’t yet.

The Utility Function

Now that we have our value function, we need a way to determine the utility of a certain action given our current belief. Because we aren’t thinking about efficiency yet, this is going to be a recursive algorithm that basically runs for some defined horizon (Don’t forget, this grows exponentially).

Because the book I am basing this off of (Cooperative Decision Making) only talks about the two-agent example for now, I will do the same. In this example, it applies.

A utility function takes the current belief state (which is a probability distribution over the spaces on the map that the two robots may currently be) and the current policy, and determines the value of that current belief. This belief state also needs to take into account the potential policies of the other agents as well.

Below is the expansion of equation 7.1 from the book in pseudo-code:

def expected_utility(agent_nodes, current_state): utility = 0 # agent_actions is the joint action of agents 1 and 2. utility += expected_reward( current_state, agent_nodes.agent_actions) for state in current_state.sub_states: state_prob = state.probability_given( node.agent_actions, current_state) # We are assuming that POSSIBLE_OBSERVATIONS is the # set of possible joint observations between agents. for L in range(0, len(POSSIBLE_OBSERVATIONS)): for observations in itertools.combinations( POSSIBLE_OBSERVATIONS, L): # Calculate utility for each possible # set of observations. observation_prob = obs_probability( observations, node.agent_actions, state) node_utility = expected_utility( agent_nodes.children_given( observations), state) utility += state_prob * \ observation_prob * \ node_utility return utility |

Exhaustive backup

I was originally going to have this as just a part of the pseudo-code below, but I kept seeing this in the things I was reading without any sort of explanation as to what it was.

An exhaustive backup is when you take the existing set of trees generated, and you make them the “leaf nodes” of every possible option that could occur before them. It is the “step backwards” that is used in any “bottom up” approach.

Below is a rough cut of what this would look like in our world. As a note, a “joint action” is the combination of all agent actions.

def exhaustive_backup(policy): """ An exhaustive backup is the creation of a new level of nodes using the existing trees as the child nodes. """ new_policy = get_all_possible_states() # Create a new layer of all possible joint agent # states. for joint_state in new_policy: joint_actions = possible_joint_actions(joint_state) for joint_action in joint_actions: dest_states = potential_result_states state, joint_action) for dest_state in dest_states: # This is literally an array of every # possible combination of observations # for each agent given a joint action # e.g. for two agents and one observation # possiblity for each agent, there will be # four different combinations of # observations that could happen. obs_probs = get_joint_obs_probs( dest_state, state, joint_action) for obs in obs_probs: add_child_state(new_policy, policy.get_state(dest_state), joint_action, obs, dest_state.prob * obs.prob) return new_policy |

In most papers, the concept of an exhaustive backup is a “given”, but when you see it called, know a lot of stuff is going down.

The First Algorithm

The first algorithm we’ll use calculates an optimal policy tree of depth T. This is the algorithm given in “Algorithm 7.1” of Cooperative Decision Making.

def dec_dynamic_programming(max_layers=10): layer = 0 policy = [Nil] curr_utility = Nil while layer < max_layers: policy[layer + 1] = exhaustive_backup(policy[layer]) populate_expected_values(policy[layer + 1]) do: pruned_policy[layer + 1] = policy[layer + 1] for agent in get_all_agents(): # As a note, prune here is finding any # action that is completely inappropriate # under any starting state for the current agent. # also, the pruning only has to prune the new # layer, as all layers below are already optimal. pruned_policy = prune(pruned_policy[layer + 1], agent) populate_expected_values(pruned_policy) if pruned_policy[layer] == policy[layer]: break policy[layer + 1] = pruned_policy[layer + 1] while True layer += 1 return policy[layer] |

Well, That’s… Less than Practical.

Yes it is, but what it does do is give us a strong base to grow on. We now see why this is hard, we know about everything that could matter, and we can use that knowledge to assess the value of other techniques.